Glossary

Cluster:

A group of observations that are similar to each other based on a defined set of features. Clustering aims to partition the data into subsets whose members are similar to one another and dissimilar to the members of other subsets.

HDBSCAN (Hierarchical Density-Based Spatial Clustering of Applications with Noise):

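A density-based clustering algorithm that identifies clusters of varying density and does not require the number of clusters to be fixed in advance. Observations that belong to no cluster are designated as noise (outliers), often labelled Cluster 0 (the label used, for example, by R's dbscan package).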

K-means Clustering:

A partitioning algorithm that divides data into a pre-defined number of clusters by minimizing within-cluster variance.
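
As an illustration only, the following is a minimal sketch of the standard iterative procedure (Lloyd's algorithm) for k-means in plain Python. The function name `kmeans`, the toy `points`, and the hand-picked starting centroids are assumptions made for this example, not part of the analysis described here.

```python
def kmeans(points, centroids, n_iter=10):
    """Lloyd's algorithm: alternate an assignment step and an update step."""
    for _ in range(n_iter):
        # Assignment step: index of the nearest centroid for each point
        # (squared Euclidean distance).
        labels = [
            min(range(len(centroids)),
                key=lambda k: sum((a - b) ** 2 for a, b in zip(p, centroids[k])))
            for p in points
        ]
        # Update step: each centroid becomes the mean of its assigned points.
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[k])  # keep an empty cluster's centroid
        centroids = new_centroids
    return labels, centroids


# Hypothetical toy data: two well-separated pairs of points.
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
labels, centroids = kmeans(points, centroids=[(0.0, 0.0), (5.0, 5.0)])
```

In practice the starting centroids are usually chosen at random and the algorithm is run several times, because the result depends on the initialization.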

PAM (Partitioning Around Medoids):

A clustering method that selects medoids as representative points and minimizes the sum of dissimilarities between each data point and its nearest medoid.

UMAP (Uniform Manifold Approximation and Projection):

A nonlinear dimensionality reduction technique that primarily preserves the local neighborhood structure of the data, along with some of its global structure, while projecting it into fewer dimensions, typically 2D or 3D for visualization.

MCA (Multiple Correspondence Analysis):

A method for reducing the dimensionality of categorical data by representing it in a lower-dimensional space.

DW-NOMINATE:

A scaling method commonly used in political science that places legislators on a small number of ideological dimensions (for example, economic and social) based on their recorded votes.

Silhouette Score:

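A measure of how well each data point fits within its assigned cluster compared with the nearest neighboring cluster. Values range from -1 to +1; a higher score indicates better-defined clusters, and a negative value suggests the point may sit in the wrong cluster.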
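
To make the definition concrete, here is a small sketch in plain Python, assuming Euclidean distance and at least two clusters. For each point, `a` is the mean distance to the other members of its own cluster, `b` is the mean distance to the members of the nearest other cluster, and the point's silhouette is `(b - a) / max(a, b)`. The function name and toy data are illustrative assumptions.

```python
from math import dist


def silhouette_score(points, labels):
    """Mean silhouette over all points; assumes at least two clusters."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # convention: a singleton cluster scores 0
            continue
        # a: mean distance to the other members of the point's own cluster
        # (the point's distance to itself contributes 0 to the sum).
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster.
        b = min(
            sum(dist(p, q) for q in members) / len(members)
            for other, members in clusters.items() if other != lab
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Hypothetical toy data: the same points under a sensible and a poor labelling.
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
good = silhouette_score(points, [0, 0, 1, 1])
bad = silhouette_score(points, [0, 1, 0, 1])
```

The sensible labelling scores close to 1, while the labelling that mixes the two groups scores below 0.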

Davies-Bouldin Index:

A metric for evaluating clustering quality that averages, over all clusters, the ratio of within-cluster spread to between-cluster separation for each cluster and its most similar neighbor. Lower values indicate better clustering.
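
For reference, the standard form of the index is sketched below. The symbols are introduced here for illustration: $k$ is the number of clusters, $s_i$ is the average distance of the points in cluster $i$ to its centroid, and $d_{ij}$ is the distance between the centroids of clusters $i$ and $j$.

$$
DB = \frac{1}{k} \sum_{i=1}^{k} \max_{j \neq i} \frac{s_i + s_j}{d_{ij}}
$$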

Calinski-Harabasz Index:

An index that evaluates clustering quality as the ratio of between-cluster dispersion to within-cluster dispersion, adjusted for the number of clusters and observations. Higher scores are better.
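
For reference, the standard form of the index is sketched below. The symbols are introduced here for illustration: $n$ is the number of observations, $k$ the number of clusters, $B$ the between-cluster sum of squares (each centroid's squared distance to the overall mean, weighted by cluster size), and $W$ the within-cluster sum of squares (each point's squared distance to its own centroid).

$$
CH = \frac{B / (k - 1)}{W / (n - k)}
$$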

Normalization:

A process that rescales the data so that each feature contributes comparably to the analysis, typically by mapping values to a common range (such as 0 to 1) or to zero mean and unit variance.
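
Two common variants, min-max scaling and z-score standardization, can be sketched as follows. Which variant applies in a given analysis is not specified here; the function names and the `ages` values are hypothetical.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


ages = [30, 40, 50, 60, 70]      # hypothetical feature values
scaled = min_max_scale(ages)     # [0.0, 0.25, 0.5, 0.75, 1.0]
standardized = z_score(ages)
```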

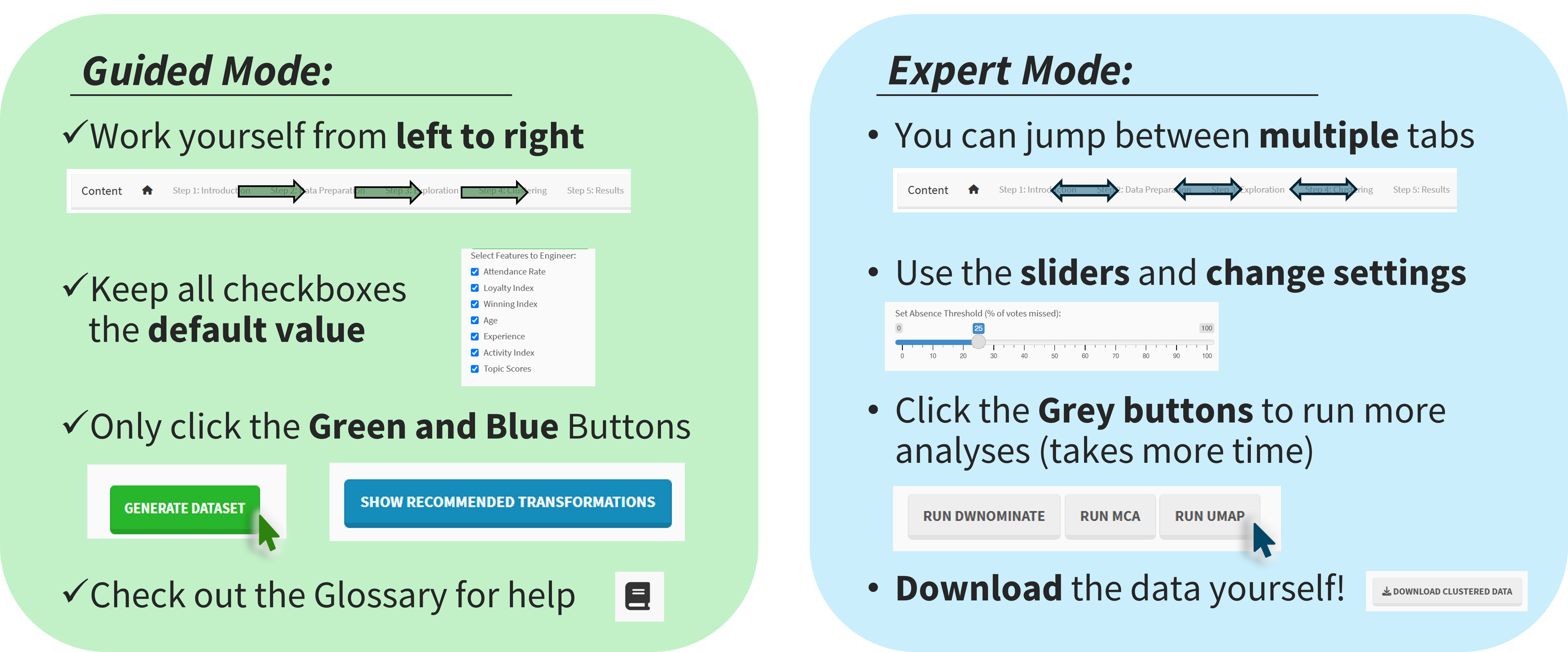

Feature Engineering:

The process of selecting, transforming, and creating features to improve the performance of machine learning models.

Complete Case Analysis:

A method for handling missing data by excluding observations with any missing values.
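
A minimal sketch of the idea, assuming missing values are represented as `None`; the records and field names are made up for illustration.

```python
# Hypothetical records; None marks a missing value.
rows = [
    {"mep": "A", "attendance": 0.91, "loyalty": 0.88},
    {"mep": "B", "attendance": None, "loyalty": 0.75},
    {"mep": "C", "attendance": 0.64, "loyalty": 0.92},
]

# Keep only the observations in which every field is present.
complete = [r for r in rows if all(v is not None for v in r.values())]
```

Here the record for "B" is dropped because one of its values is missing. The approach is simple but reduces the sample size, and it can bias results when values are not missing at random.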

Radar Chart:

A graphical method of displaying multivariate data in the form of a two-dimensional chart of three or more quantitative variables represented on axes starting from the same point.

Box Plot:

A visualization that summarizes the distribution of a dataset through its median, quartiles, and whiskers, and shows potential outliers as individual points.

Interactive Parliament Plot:

A custom visualization that maps Members of the European Parliament (MEPs) and their respective parties onto a semicircular plot with clickable interactivity.

Correlation Coefficient:

A measure of the strength and direction of the linear relationship between two variables, ranging from -1 (perfect negative correlation) to +1 (perfect positive correlation).
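
The definition above matches the Pearson correlation coefficient, which can be computed as the covariance of the two variables divided by the product of their standard deviations. A minimal sketch with hypothetical data:

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


x = [1, 2, 3, 4]
r_up = pearson_r(x, [2, 4, 6, 8])    # perfectly increasing linear relationship
r_down = pearson_r(x, [8, 6, 4, 2])  # perfectly decreasing linear relationship
```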

R-squared (Coefficient of Determination):

The proportion of variance in the dependent variable that is predictable from the independent variables.

Adjusted R-squared:

A modified version of R-squared that adjusts for the number of predictors in the model, penalizing the inclusion of irrelevant variables.
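
The relationship between R-squared and its adjusted version can be sketched as below, where `n` is the number of observations and `p` the number of predictors. The data values are hypothetical.

```python
def r_squared(y, y_hat):
    """1 minus the ratio of residual to total sum of squares."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Penalize R-squared for the number of predictors p given n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


y = [1.0, 2.0, 3.0, 4.0, 5.0]        # hypothetical observed values
y_hat = [1.1, 1.9, 3.2, 3.8, 5.0]    # hypothetical model predictions
r2 = r_squared(y, y_hat)
adj_r2 = adjusted_r_squared(r2, n=len(y), p=1)
```

Because the penalty grows with `p`, adding a predictor raises the adjusted value only if it improves the fit by more than would be expected by chance.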

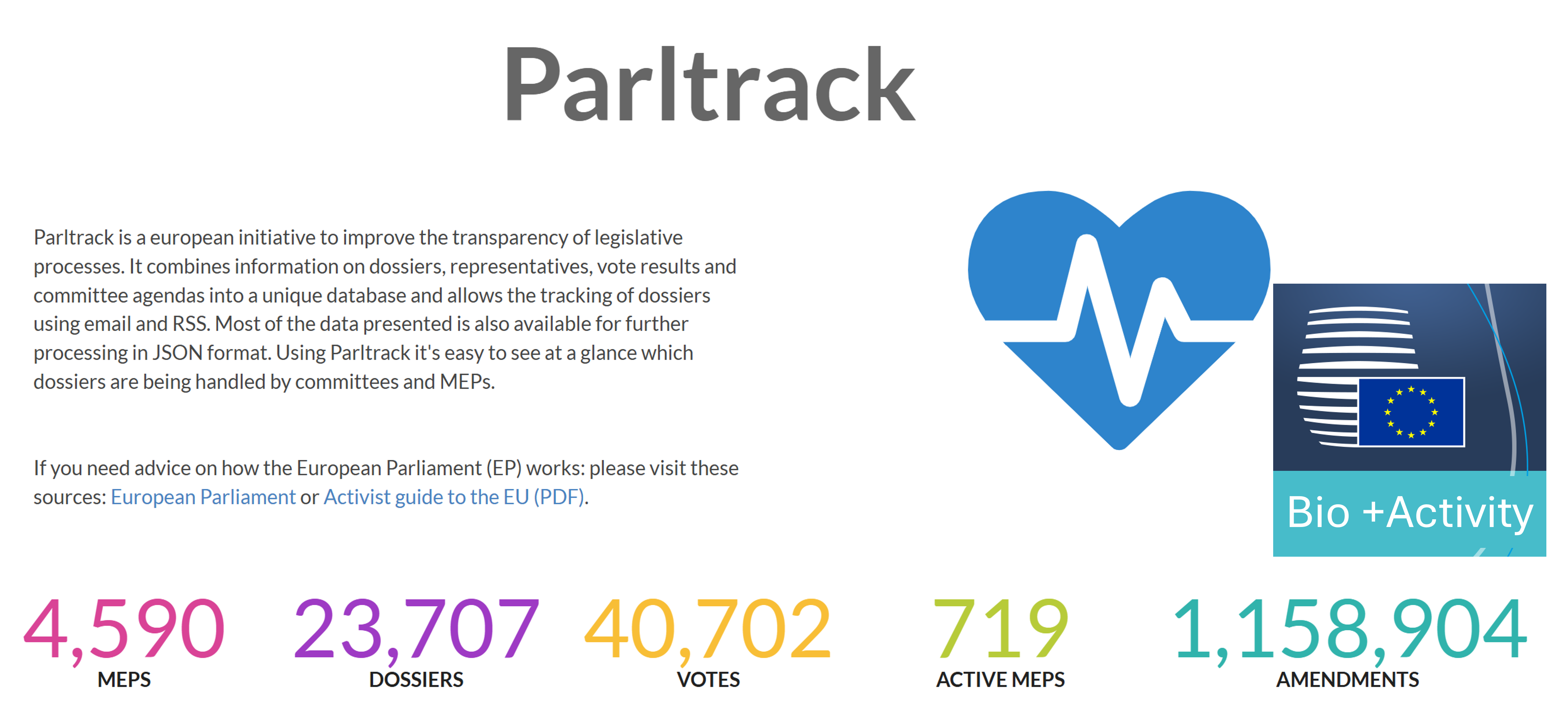

EPG (European Political Group):

The official grouping of Members of the European Parliament based on shared political ideologies.

MEP (Member of the European Parliament):

An elected representative in the European Parliament.

Voting Alignment:

A measure of how frequently MEPs or parties vote in line with one another.
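
One simple way to compute such a measure, shown here as an assumption rather than the definition used in the analysis, is the share of votes on which two MEPs cast the same ballot, counting only the votes in which both took part. The function name and vote encoding are hypothetical.

```python
def voting_alignment(votes_a, votes_b):
    """Share of jointly attended votes on which both cast the same ballot.

    Votes are given in the same order for both MEPs; None marks an absence.
    Returns None when the two have no votes in common.
    """
    shared = [(a, b) for a, b in zip(votes_a, votes_b)
              if a is not None and b is not None]
    if not shared:
        return None
    return sum(a == b for a, b in shared) / len(shared)


# Hypothetical voting records over five roll calls.
mep_a = ["for", "against", "for", None, "abstain"]
mep_b = ["for", "for", "for", "against", "abstain"]
alignment = voting_alignment(mep_a, mep_b)  # 3 matches out of 4 shared votes
```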

Shiny:

An R framework for building interactive web applications and dashboards for data analysis and visualization.

reactable:

A package in R for creating interactive and customizable tables with advanced features.

ggplot2:

A widely used R package for creating elegant and versatile visualizations.

Cluster Stability:

The extent to which clusters remain consistent across different samples or parameter settings, often tested through bootstrapping.

Outliers:

Data points that deviate significantly from the majority of observations. In clustering they are often identified and then excluded or assigned to a separate noise group.

Bootstrap Analysis:

A resampling method used to estimate the stability of clusters by creating multiple datasets through random sampling with replacement.
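
The resampling step can be sketched as follows. Each bootstrap dataset is a list of row indices drawn with replacement, which would then be re-clustered. Comparing clusters across resamples with Jaccard similarity is one common choice and is an assumption here, as are the function names and the seed.

```python
import random


def bootstrap_indices(n, n_boot, seed=0):
    """Draw n_boot resamples of n row indices, sampling with replacement."""
    rng = random.Random(seed)
    return [rng.choices(range(n), k=n) for _ in range(n_boot)]


def jaccard(a, b):
    """Overlap between two sets of observation ids (1.0 means identical)."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b)


samples = bootstrap_indices(n=10, n_boot=100)

# Hypothetical example: a cluster found in the original data versus its
# best-matching cluster in one resample.
overlap = jaccard({1, 2, 3}, {2, 3, 4})  # 2 shared ids out of 4 distinct ids
```

A cluster whose best-matching counterpart has a high average overlap across resamples can be considered stable.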